Enhancing Passenger Interaction in Autonomous Vehicles

Seong Hee Lee

Yunqin Wang

Juran Li

Minwei Jiang

Instructed by Professor François Guimbretière

Making Passenger Autonomous Vehicle Interaction as natural as interactions with a Human Driver

Abstract

In this research project, we identify the problems passengers have in Autonomous Vehicles (AV) such as understanding the driving intent of the AV in unexpected situations, making minor route changes along the trip, and the “Last 100-meter Problem”. We have found that these issues combined demonstrate how passengers are unable to naturally understand and communicate information with an AV as they could with a human driver.

In our design, we focus on establishing systems that allow passengers to communicate with the AV as naturally as they could with a human driver, even when no driver is present (level 4 and above). Our design includes natural user interaction in AVs such as gesture interaction, interactive window displays, and sound alert systems. With these elements, our proposal envisions natural communication between AVs and passengers.

What happens when no human driver is present?

Overview of the Final Design

Overview of the main features in our design. Full Youtube Video.

Introduction

Imagine you are in an Autonomous Vehicle trying to pick up your friends. Along the way, you want to stop by a sandwich store to get breakfast but you don’t know exactly where so you want to drive by a local area with a lot of restaurants so that you can search for a good place to eat. Additionally, you want to drive by a quiet route near the park to enjoy the weather as you eat your sandwich. Finally, you have to pick up your two friends who are at different locations. How would you communicate these specific driving needs to your AV without a human driver present?

Being able to communicate with your AV as easily as you can with a human driver is necessary for passengers to have a comfortable ride and reach their true destinations. Human driving habits reveal that people don’t always have a specific destination in mind and tend to stop in search of specific locations. Additionally, people tend to make minor route changes towards the end of their rides, which reveals “the last 100-meter problem”. Whether future AVs can provide these nuanced interactions to passengers is an open question that needs to be addressed. However, current HCI research on natural passenger interaction inside fully autonomous vehicles is sparse, both because few fully operating AVs (level 4 and above) exist in the industry and because research has focused heavily on semi-autonomous vehicles, which have more pressing HCI issues.

To combat this problem, we have identified the main problems that passengers in AVs face through paper research and expert interviews. Through this process, we designed solutions to these issues using natural user interactions such as interactive window screens, gestures, voice commands, and audio.

Current passenger interaction with Autonomous Vehicles involves a secondary device such as a mobile phone, or the assistance of service staff. We are looking at ways to allow direct communication from the passenger to the Autonomous Vehicle.

Research & Design Premise

Before proceeding, we built our research and design on several premises. The design is discussed inside a fully autonomous vehicle with no driver present. The main technology used includes gesture recognition, voice recognition, eye tracking, and a window display.

Fully Autonomous Vehicle

The AV operates without a human driver and is able to deal with all situations on the road regardless of weather conditions, traffic, pedestrian behavior, etc. According to USDOT, AVs are generally categorized into five levels. Our design is best suited to the highest levels, level 4 and level 5, where there is no driver present in the vehicle.

NUI technology

As natural user interaction (NUI) using voice or gesture based interfaces has become familiar, we design the interaction with the AV based on gesture recognition, voice recognition and eye tracking through the window interface. Such technologies have been adopted in some AV products on the market, like BMW 5 Series Gesture Control and Mercedes-Benz Head-Up Display. Research also looks into the usage of gestures [1] and voice commands [6] to communicate with the AV.

Waymo Case Study

Industry Example Case Study - Waymo

We conducted a case study looking into how current level 4 autonomous vehicles handle passenger interaction. We chose Waymo because it is one of the few level 4 fully autonomous vehicles operating on the road. In a Waymo car, no driver is present and the passenger sits in the back seat.

We investigated the different types of passenger interactions that occur in Waymo vehicles.

1. Limited Passenger Controls

One noticeable thing inside of Waymo Cars is that there are just four user controls for the passenger. Users have the option of pulling over, starting the ride, locking and unlocking the door, or calling for help.

2. Limited Passenger Interface

In their official post about their user screens, Waymo states that they have tried to show as much information as possible to users in order to build initial trust. Waymo takes the developer's view of what the car can see and translates it into the user screen, showing users everything the car is able to detect.

The Waymo screen goes even further and displays more specific information on where the car is going. It occasionally shows detailed, white-highlighted renderings of specific detected objects. It also shows traffic light information and final destination information.

3. Motion Sickness

The final problem with these screens is that they are displayed in the back seat of a car. The discrepancy between a still screen and the motion of a moving vehicle can cause significant motion sickness.

Interview with Waymo Rider - JJRicks

Waymo User Study

We had an opportunity to interview JJRicks, a YouTuber who has gone on over 180 Waymo rides.

Here is an overview of the interview we conducted with him.

Users do not pay close attention to Waymo screens once trust is built.

One of the passenger's motivations to take an autonomous vehicle is to free themselves from driving and spend time on whatever they are more interested in. Thus, nobody is inclined to stare at a screen and monitor the vehicle throughout the whole ride.

Static screens in a moving car cause a lot of motion sickness.

It is common that staring or even glancing frequently at a static screen on a moving vehicle can cause headaches or nausea.

Extensive unnecessary information is displayed.

The experience can be overwhelming if too much information is shown, since passengers care almost only about where they are going and when they will arrive.

A lack of information when something goes wrong.

However, when the car is stranded in an unexpected situation, no detailed prompts are shown to reassure passengers, which sometimes makes people nervous. It is understandable that being alone in an unfamiliar vehicle, without knowing what is going on, would be stressful.

Youtube Channel of JJRicks

Identifying Main Problems and Needs of Passengers in Autonomous Vehicles

Problem Statement

Through our research process, we were able to identify 4 main problems that passengers of Autonomous Vehicles have.

Communicating AV Driving Intention in Unexpected Road Situations

If the passenger sees that there is an unexpected roadblock situation, they can look at the driver to confirm that they have noticed that too and trust that they will take appropriate action. However, when inside an AV, as a passenger, you will start to have a number of questions: Has the AV recognized this? Should I step out of the car? Will the AV take another route automatically? Will I have to input a different route? Without a driver present in the vehicle, the passenger may feel great discomfort when encountering an unexpected situation in an AV.

Though future AV technology claims to provide fully automated and safe technology, passengers still will have questions and concerns when encountering an unforeseen situation. We anticipate that methods for communicating AV driving intent will be a necessity in helping with the acceptance of automated vehicles, decreasing anxiety, and helping reduce mental workload.

Research on how explanations affect the acceptance of automated vehicles has shown that participants preferred an awareness of why an autonomous navigation aid chose specific directions. Additionally, research has shown that alert systems in automated vehicles decreased drivers’ anxiety and increased their sense of control, alertness, and preference for the AV. Prior research on automation in flight decks has also shown that explanations can help to avoid automation surprise and negative emotional reactions.

In our interview with Professor David Sirkin, he explained to us that displaying driving intent could help users effectively create a mental model of how the system works. Things such as informing passengers of the AV's next actions and even displaying its uncertainty could help build acceptance of new AV technologies.

Vehicle automation offers promise for improving safe transportation, access to mobility, and quality of life. However, in the early stages of automation, human drivers remain an integral component of the system, making passenger assistance systems necessary. Even with fully autonomous vehicles that require no human driver (levels 4 and 5), systems for passenger assistance and an explanation of the autonomous vehicle’s intent will be necessary for passengers when they are met with unexpected road situations during their trip. Therefore, we have identified communicating AV driving intention in unexpected situations to be a need for passengers in an autonomous vehicle.

Communicating Dynamic Driving Navigation Needs to AV

When you wish to stop by multiple destinations before you reach your final destination, you can tell your driver to make those certain stops. However, in an AV, passengers face a limitation in communicating their dynamic driving intentions.

Research on adaptable maps shows that users face a mental burden when trying to achieve dynamic driving tasks on a GPS map. Inside an AV, trying to input these dynamic controls while driving to your destination can cause a great mental strain on users. Additionally, users’ navigation needs are sometimes uncertain and dynamic. In manual driving, for instance, users often drive along streets to search for places of interest (restaurants or shops) or moving objects or people (waiting friends) and change their minds about their destinations.

In situations where users want the AV to perform driving tasks in accordance with their dynamic driving needs, automated systems that only let the passenger input a single destination will not meet the needs of passengers. Therefore, we identify communication of dynamic driving commands to be another need of passengers in Autonomous Vehicles.

“The Last 100-meter Problem”

Towards the end of a taxi or shared ride, passengers often ask drivers to make minor route adjustments to reach their true destinations, which are different from those indicated on drivers’ GPS maps. Passengers are able to communicate this information effectively to a taxi driver, but when inside of an AV, current technology faces limitations in achieving this task.

Research on road robot navigation shows that insufficient precision in client-side maps results in an inability to accurately mark target locations. With future AV technology highly dependent on GPS maps, inaccurate map data, unmarked route changes, and short destination travel all appear to be challenges that we will come to face.

Additionally, map-reading imposes a massive cognitive burden on users. Towards the end of the trip, passengers rely on communication with their driver (through gestures, voice, a shared view, etc.) to truly reach their final destinations instead of relying on the map. Finding a way for natural communication to be possible in AVs will be necessary for solving the last 100-meter problem.

Unclear Passenger Communication Tools

The last challenge we identify is that it is unclear to the passenger what controls they use to communicate with the AV.

Prior research has looked at ways of using Natural User Interaction, such as gestures, voice commands, and visual screens, to address these issues. A multitude of new technologies are being developed to enhance passenger interaction in AVs, such as Mixed-Reality Screens, eye-tracking, and haptic feedback in chairs. However, which passenger communication tools would be the most effective for passengers in an AV is an area that needs further research. Further research and field experiments on the effectiveness of each of these interaction methods will be needed for the adoption of these technologies.

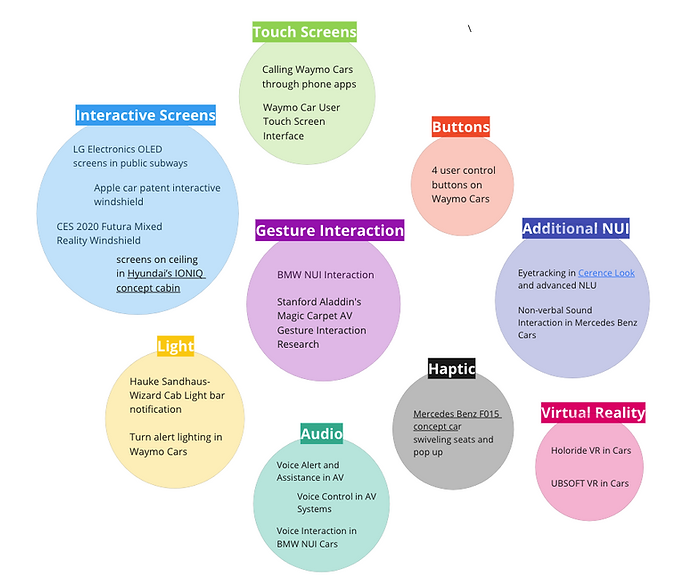

A multitude of different passenger interaction methods is being introduced into the market. Below we have created a summary of the different types of passenger communication tools in AVs that are being introduced.

Overview of the different types of passenger interaction methods in Autonomous Vehicles

Get Insightful View from Professionals

Expert Interviews

We interviewed 5 experts, including professors and head designers, to understand the main problems surrounding autonomous vehicles.

Expert - David Sirkin

Associate Professor

Interaction Design

Stanford University

1. Consider human limitations in monitoring automated systems

2. Consider the parallax problem in a window design

3. What information can the car display about its uncertainty?

4. Explore, prototype, and test multiple times to solidify your design.

Expert - Wendy Ju

Associate Professor

Jacobs Technion-Cornell Institute

Cornell Tech and the Technion

1. Design an ambient visual screen to address the motion sickness caused by looking at a virtual screen inside the car.

2. Consider the multi-passenger scenario.

Expert - Hauke Sandhaus

Ph.D. student

Passenger Interaction with Autonomous Vehicles

Information Science

Cornell University

1. Focus on Level 4 Autonomous Vehicles.

2. A design combining both visual and audio methods.

3. Use ambient lighting to signal changes of motion to passengers without demanding their attention.

Designer - Kyungmin Kim

Head Product Design

Manager @Nuro

1. Communicate effectively what the AV is going to do when it is seen in public.

2. Solve the misunderstanding between the bot and customers.

3. Make it as natural as possible. Don't enlarge the gap between traditional cars and AVs.

Journey to the Final Solution

Design Process

Our workflow was not linear; instead, we went back and forth between stages to continuously refine the problems to be solved and polish the solution we proposed. We drew on assorted online resources, professionals in the field, and insightful scholars, and finally tested our design with potential users.

Workflow Diagram

Ideation

In the first round of ideation, we brainstormed for innovative and crazy ideas that might be helpful.

To give a more concrete setting, we initially considered dozens of scenarios that could be encountered in a level 4 vehicle, hoping that our design could solve the problems they raise.

To the right is our ideation board for choosing the scenarios we would like to dive into.

Scenario Ideation

Then, we started to sketch potential solutions for the selected scenarios and to gather feedback from the other team members. Some solutions were welcomed and resonated with the team, while others were too vague or hard to understand.

Pick up a Friend

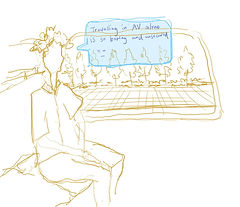

Travelling in the Wilderness

Below is an illustration of a scenario we chose at this early stage.

Imagine a passenger in a car travelling at high speed on a very boring stretch of road. To improve the passenger's experience, the window displays different functions for the passenger to choose from, such as playing the music the passenger likes or showing on-screen games.

Showing Information of a Spot

Hologram Driver

Rapid Prototyping

As ideation went on, we settled on the window display as our solution. To experiment with this proposal, we made a low-fidelity physical prototype and asked participants to try it and give us early feedback.

Physical Prototype

1. Motion Sickness

We used a blurry window view to simulate a vehicle moving at high speed and demonstrate the motion sickness issue.

2. Selecting Locations

We used a paper-cut frame to simulate an object being captured by the AV, and showed how the frame would follow the object in the moving field.

3. The Parallax Problem

We moved the scene further from the window to illustrate the parallax issue: the captured object may appear at a different position on the window depending on where the passenger is seated.

Low-Fidelity Design

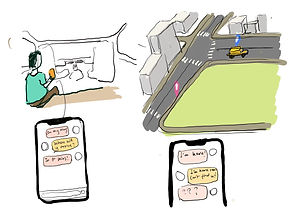

Later, we moved on to the digital stage and implemented our design with an interactive Figma design and static screen mock-ups. To show our design clearly, we organized a virtual journey in an autonomous vehicle and integrated all the situations into this trip. This solution also involved the use of a phone app, which turned out to distract users from our main window display system. Thus, we eliminated this idea in our final proposal.

a. Summon an AV

b. Set the Destination

c. 100 Meter Problem Solution

d. Pick up a Friend

Solution to an entire journey in an AV

Final Design

Through this process, we have created our final design that aims to provide passengers a solution to the different problems they have in Autonomous Vehicles.

Design Goal

Our design goal is to make interactions that happen between a passenger and an AV as natural as interactions with a human driver.

Main Features

Design Features

Our final design uses 4 main features to help passengers obtain natural communication with the Autonomous Vehicle.

Voice Control

Passengers can input voice commands to the AV, talking to the AV as they could to a human driver.

Gestures

We often use gestures to show our drivers where we want to go or what we are referring to. We have decided to use gestures in our system to allow for this natural communication.

Audio

We have decided to add voice assistance to the AV so that the passenger can communicate naturally with the AV.

Window Display

The window display acts as a shared view between the passenger and the Autonomous Vehicle. Passengers can use this display to communicate their intent and view to the AV and confirm that the AV has recognized it too.

Voice Control

Passengers can speak to the AV as they would speak to a driver.

Audio

The AV offers voice assistance and chimes to show recognition and intention.

Gestures

“Drop me off over there!” Passengers can point to the screen as they would point to a driver to show their drop-off intention.

Window Display

Driving intent communication between the AV and the passenger is displayed directly on the window, a transparent display onto the outside world.

Design Solution

1. Communicating Dynamic Driving Needs

Near your destination, you realize that you entered the wrong destination: you need to go to MoMA, not the Guggenheim. You would also like to stop by a flower shop on the way and see what Central Park looks like from the outside. How can you communicate to your Autonomous Vehicle that you would like to make a route change and stop by all these different locations?

Hmmm... I want to go on a different route because I want to see what the newly opened bakery looks like.

Solution to communicating dynamic driving needs to the autonomous vehicle

We often tell our driver about specific driving needs that cannot be effectively communicated through a GPS map. We have used this same approach in an Autonomous Vehicle, where the passenger can simply tell the AV their driving needs and the AV can adjust accordingly.

2. Solution to "The Last 100 meter Problem"

You are in an Autonomous Vehicle and now approaching your destination, the Guggenheim. You want to be dropped off across the street where there is less traffic so you can get in more quickly. How can you communicate to your AV that you would like to be dropped off at that exact location?

Solution to the last 100 meter problem in autonomous vehicles

At the end of a ride, passengers often use gestures along with speech to show the driver where they would like to be dropped off. We would like to use this same natural communication method to let passengers tell the car their exact drop-off location.

Additionally, we have designed this feature to be provided mainly on the front window of the car. On the side window, the view shifts and blurs much more than on the front window, which makes selection harder for users. Selecting a drop-off location through the side window is also an unlikely situation, because by the time a location appears in the side window, the car has already passed it.

3. Alert System

You are inside of an autonomous vehicle but the car has encountered a mechanical malfunction. How should the car alert the passengers of this issue?

Passenger Attention Aware alert system design

During our interview with Professor David Sirkin, we learned about Mica Endsley's situation awareness levels. Raising situational awareness involves three levels: alert (perception, in Endsley's terms), comprehension, and projection. We are focusing on the first level, alert, to help passengers recognize that there is a malfunction when they are engaged in a different activity.

4. Displaying AV Driving Intent in Unexpected Situations

You are driving to work but the usual path that you take is blocked by construction. What should the AV do in this unexpected situation? Our design shows how the AV communicates its driving intent when faced with an unexpected situation, by displaying a new route on the window and alerting the passenger.

Solution to communicating driving intent to passengers in unexpected road situations

In this design, the autonomous vehicle will communicate to the passenger what its driving intent is when it is met with an unexpected construction block on the road. This will assure the passenger of what will happen next and reduce their anxiety while helping build a mental model of the system's capabilities.

User Study

We conducted 10 user interviews and asked our users about the system. Although we received generally positive feedback on the intention of our design and on how it addresses the main problems, participants also told us that some aspects of our solution could be improved. Here are the major insights and how we could act on them.

Research Protocol

Scenarios

To test the design, the team recruited volunteer testers and asked them to describe how they felt during the journey.

The team members first walked each participant through all the features of the design and then asked them to imagine several specific scenarios. Questions included "What features would you use to solve the problem?", "What features wouldn't you use?", "What other problems do you imagine you would face?", etc.

After all the user research, the team organized and compared all the answers, and summarized the most common feedback.

a. Scenario 1

The Last 100-meter Problem

You are in an Autonomous Vehicle and you are now approaching your destination. However, due to the traffic outside your destination, you would like to be dropped off at a specific location across the street.

How can you communicate to your AV that you would like to be dropped off specifically at that location?

b. Scenario 2

Dynamic Driving Needs

You are a tourist in New York in an Autonomous Vehicle and you have set your destination to MoMA. However, you are hungry, and along the way you want to stop at the new bakery that is popular on Instagram. Additionally, you would like to get a glimpse of what Central Park looks like. You would also like to see what the Guggenheim museum looks like.

How can you communicate to your Autonomous Vehicle that you would like to take a route that can stop by all these locations or take a look at these locations along the way?

c. Scenario 3

Alert Systems

Inside of an AV, you are focused on a different activity. However, there is a machine malfunction that has occurred in the car.

How can the car alert you of this situation? What method of alert would you prefer?

d. Scenario 4

Driving Intent Communication

You are driving to your workplace in an AV but the usual road you take is blocked by construction. What should the AV do?

What would you want the AV to do in this situation?

User Study

User Feedback & Reflections

Reduce Chimes

Some users thought that the chimes could be too much at times.

We believe that this might not be an issue if the design were tested in a real car. Since the users were looking at a design that focused specifically on situations that required an alert, the chimes could have seemed excessive. In a real situation, the car would not be chiming most of the time, so users would hear the chime much less frequently.

Confirmation Messages

Users would like confirmation messages to be displayed to make sure certain choices made by the Autonomous Vehicles can be confirmed. For example, in the route resetting scenario, users would like the car to ask “Are you sure you want to reset the route to MOMA?”

We think that this is important feedback and something we should include in our future design. We should add a confirmation message in case the command recognized by the AV is inaccurate.
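A minimal sketch of how such a confirmation step could gate a route change. This is an illustration under our own assumptions, not part of the prototype: the function names, the list-of-stops route representation, and the `ask_passenger` callback (a stand-in for the window display or voice assistant) are all hypothetical.

```python
from typing import Callable, List


def confirmation_prompt(destination: str) -> str:
    """Build the question shown or spoken before a route change is applied."""
    return f"Are you sure you want to reset the route to {destination}?"


def request_route_change(
    route: List[str],
    new_destination: str,
    ask_passenger: Callable[[str], bool],
) -> List[str]:
    """Return the updated route only if the passenger confirms the prompt.

    `ask_passenger` is a hypothetical stand-in for the window display or
    voice assistant: it receives the prompt and returns True on "yes".
    """
    if ask_passenger(confirmation_prompt(new_destination)):
        # Replace the final stop and keep any intermediate stops.
        return route[:-1] + [new_destination]
    # Declined or misrecognized: leave the route unchanged.
    return list(route)


current = ["Flower shop", "Guggenheim"]
confirmed = request_route_change(current, "MoMA", lambda prompt: True)
declined = request_route_change(current, "MoMA", lambda prompt: False)
```

In this sketch a misrecognized command costs the passenger one "no" rather than a wrong route, at the price of an extra prompt on every route change.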

Additional Travel Information

Users would like to see more information displayed on the map about how long it will take and the estimated arrival time.

We also believe that this is important feedback. It requires us to think about how to display this additional information, and about where on the display we should place the map, the estimated arrival time, and other types of additional information the user would like to see.

Parallax Problem

Many users were concerned about the potential parallax issue with the last 100 meter problem.

This parallax problem could be solved with head/eye-tracking technology that captures the view that the user sees. Another important feature in this design is a confirmation screen that lets users see the view they captured and how it is reflected directly on the screen. Additionally, onboarding users on how to use this feature would be a necessary step for it to be effective.
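The geometry behind this concern can be sketched in a few lines. This is a toy model under our own assumptions, not a description of the prototype: the window is treated as a flat plane at z = 0, eye positions are assumed to come from a head/eye tracker in metres, and `Point3D` and `window_point` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Point3D:
    x: float  # metres, sideways along the window
    y: float  # metres, up
    z: float  # metres, depth: negative inside the car, positive outside


def window_point(eye: Point3D, target: Point3D) -> Tuple[float, float]:
    """Return the (x, y) point where the sight line from eye to target
    crosses the window plane z = 0 (an assumed, idealised flat window)."""
    if not (eye.z < 0.0 < target.z):
        raise ValueError("eye must be inside (z < 0) and target outside (z > 0)")
    # Fraction of the way from the eye to the target at which z reaches 0.
    t = -eye.z / (target.z - eye.z)
    return (eye.x + t * (target.x - eye.x), eye.y + t * (target.y - eye.y))


# Two passengers seated one metre apart, looking at the same drop-off spot.
target = Point3D(4.0, 2.0, 3.0)
left_seat = window_point(Point3D(0.0, 0.0, -1.0), target)
right_seat = window_point(Point3D(1.0, 0.0, -1.0), target)
print(left_seat, right_seat)  # (1.0, 0.5) (1.75, 0.5)
```

In this toy setup the highlight for the same spot lands 0.75 m apart on the window for the two seats, so a single fixed overlay cannot be correct for both passengers. The same projection, run in reverse from a pointed-at window position and a tracked eye position, is what would let the AV recover the intended location.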

Insufficient info in the alert system

What is after “step out”?

Make instructions more complete, and inform users whether help is coming or they should call the police.

Users told us they wanted to see more screens after the alert screen. Since our design focused mainly on the "alert" step of a situation awareness system, we did not design the subsequent steps of this process. We should have explained this more clearly in the user testing protocol. In this design, we are focusing on alerting the users of a situation through lights on peripheral windows, chimes, and voice assistance systems.

Initial Onboarding of System Technology

These interaction technologies are quite new and might require a certain amount of time before users can get accustomed to them.

We think this is an important challenge that our design will face. Though these systems provide natural interaction like passengers would have with a human driver, the interaction methods used in these vehicles are quite new and many users would need some time to get accustomed to them. Realizing that you can communicate to a car like you can communicate to a human could be a great challenge for some users.

Overall Reflection

Through user studies, we were able to identify gaps in our design such as the lack of additional driving information, interaction methods to access these driving methods, and confirmation sections. Additionally, we were able to understand user problems and patterns of interaction in cars and realize the differences in human behavior when they are interacting with a machine instead of a human driver. Finally, we were able to identify different types of technology that would be required to make this system possible.

We also were able to explore different design solutions to the problems that we were able to identify in cars. Through this process, we were able to think about the different interaction methods that may be necessary for people to have inside cars and think about what method would be the most natural for the user.

Design Limitations & Future Work

The greatest challenge that our design faces is understanding how users would interact with this new type of technology. It is not yet understood how users will behave in situations that require them to use a type of technology they have never used before.

Since our design has not been tested inside a real car, we do not yet know what it will feel like inside a moving vehicle. Additionally, our design has certain limitations when it is used by people with certain disabilities.

Impact Assessment

Assessment

In addition to our design, we have done an impact assessment of Autonomous Vehicle technology and the impacts it will have on transportation, infrastructure, energy consumption, and society (employment, data privacy, etc.).

Transportation

- Significant congestion reduction could occur if the safety benefits alone are realized. FHWA estimates that 25 percent of congestion is attributable to traffic incidents, around half of which are crashes.

- Responsible dissemination and use of AV data can help transportation network managers and designers. This data could be used to facilitate a shift from a gas tax to a VMT fee, or potentially implement congestion prices by location and time of day.

- Crash savings, travel time reduction, fuel efficiency, and parking benefits are estimated to approach $2000 per year per AV.

- Difficulty in communicating nuanced driving needs through limited technology options may end up increasing the travel time for passengers.

Energy Consumption

- More efficient traffic flow will result in less traffic congestion and, consequently, less idle time for vehicles.

- Additional fuel savings may accrue through AVs’ smart parking decisions, helping avoid “cruising for parking.”

- On the other hand, emissions can increase after AV implementation, mainly because of higher speeds and potential aerodynamic shape changes (Taiebat et al., 2018).

Infrastructure

- Changes in parking patterns affect parking facilities and can free up space in dense urban areas (Millard-Ball, 2019), which can promote urban designs that are friendly to active transportation.

- AVs change travel behavior, and making travel easier can result in urban sprawl and consequently increase VMT in cities (Milakis et al., 2017).

Society

- Shared AVs make it more affordable for low-income people to access vehicles without having to purchase them or pay for maintenance.

- Growing access to automobiles will improve the mobility of low-income people and their access to jobs and services.

- Employment: Autonomously operated trucks may face significant resistance from labor groups. The shared ride industry, estimated to be worth 61 billion, will be threatened by AVs. Uber and Lyft make up 99% of the shared ride industry. Approximately 2 million shared-ride workers face threats to their jobs.

- The potential geographical imbalance of AV ride-hailing services may also marginalize low-income people. For example, in the early stages of adoption, AV ride-hailing may be available only in densely populated, affluent neighborhoods.

For more information on the social impact of Autonomous Vehicles, check out our report here.

Conclusion

Through this research process, we learned about the HCI challenges that arise when passengers try to interact with Autonomous Vehicles. By testing different iterations of our designs, we learned which features passengers need in order to have fluent interactions. Additionally, by conducting an impact assessment, we were able to consider the social implications that this new technology can bring.

We would like to thank all the people who helped us in our user interviews and gave us thoughtful feedback on our design.

We would also like to thank Professor David Sirkin for conducting two sessions of user interviews with us and giving us great feedback on how we could improve our design along with tips for presenting our design to a greater audience.

Finally, we would like to thank Professor Francois Guimbretiere for his thoughtful comments throughout the course of this study. They truly helped us improve our design and think critically about what is important in this new age of Autonomous Vehicles.

Our Team!

CARAT Team Members

Appendix

1. Expert Interview Summary Document: link

2. User Interview Summary Document: link

3. Final Poster: https://drive.google.com/drive/folders/1Ak2Xxt6Nlv26NqgAeECAB8DVbzhJv2WC

4. Final Video: https://www.youtube.com/watch?v=zbaaPsK1ze4

5. Initial Design Figma: https://www.figma.com/file/ZYo3eSHjfO4J8GoShBbFTO/CARAT?node-id=0%3A1

References

1. Baldwin, R. (2021, November 29). Waymo reveals details on 5th-gen self-driving Jaguar I-Pace. Car and Driver. Retrieved April 15, 2022, from https://www.caranddriver.com/news/a31228617/waymo-jaguar-ipace-self-driving-details/

2. Preimesberger, C. J. (2022, April 12). Multi-camera 3D-vision provider Nodar secures funding to drive automation. VentureBeat. Retrieved April 15, 2022, from https://venturebeat.com/2022/04/12/multi-camera-3d-vision-provider-nodar-secures-12m-in-series-a-funding/

3. Waymo 360° experience. Waymo. (n.d.). Retrieved April 15, 2022, from https://waymo.com/360experience/

4. FutureCar. (n.d.). Apple files patent for augmented-reality windshield. FutureCar.com - via @FutureCar_Media. Retrieved April 15, 2022, from https://www.futurecar.com/2564/Apple-Files-Patent-for-Augmented-reality-Windshield

5. Futurus. (2019, December 13). Futurus wants to turn your car's whole windscreen into an AR display. New Atlas. Retrieved April 15, 2022, from https://newatlas.com/automotive/futuris-augmented-reality-windshield-hud/

6. CES: Mixed-reality windshield. Gadget. (2020, January 14). Retrieved April 15, 2022, from https://gadget.co.za/ces-mixed-reality-windshield/

7. Car spin 360° gallery. 360 Degree Car Gallery by Motorstreet®. (n.d.). Retrieved April 15, 2022, from https://motorstreet360.com/360-degree-car-gallery.php

8. Baltodano, S., Sibi, S., Martelaro, N., Gowda, N., & Ju, W. (2015, September 1). The RRADS platform. Proceedings of the 7th International Conference on Automotive User Interfaces and Interactive Vehicular Applications. ACM. Retrieved April 15, 2022, from https://dl.acm.org/doi/abs/10.1145/2799250.2799288

9. Nurie Jeong: Experience natural user interface (NUI) with Myoband. PAUL. (n.d.). Retrieved April 15, 2022, from https://paul.zhdk.ch/blog/index.php?entryid=335&lang=en

10. Designworks. (2021, May 4). Design insight: Designing for the future of interaction with natural user interfaces. Designworks. Retrieved April 15, 2022, from https://www.bmwgroupdesignworks.com/design-insights-natural-user-interfa